CES 2.0: Measuring Customer Effort

Updated: Dec 16, 2025 Reading time ≈ 8 min

CES 2.0 is an updated version of the Customer Effort Score metric used to measure how easy it is for customers to resolve an issue or complete a task when dealing with a company.

The core CES 2.0 item is a single statement:

"The company made it easy for me to handle my issue."

Customers rate their agreement on a 7-point Likert scale, where:

- 1 = "Strongly disagree" (very high effort)

- 7 = "Strongly agree" (very low effort)

This change was introduced after the original CES proved confusing: in early versions, a low number meant low effort, which many users intuitively perceived as "bad". CES 2.0 flips this logic: higher scores now clearly indicate a smoother experience.

CES 2.0 is part of the broader Customer Experience and VOC toolkit, alongside:

- CSAT – satisfaction with a specific interaction,

- NPS and mNPS – loyalty and recommendation intent,

- CSS and CSI – broader satisfaction indices,

- SUPR-Q, SUS and SEQ – usability and UX quality.

Where those metrics focus on outcomes and feelings, CES 2.0 focuses on effort: "How hard was this for the customer?"

You can implement CES 2.0 quickly using a ready-made Customer Effort survey template in tools like SurveyNinja, then integrate it into your support flows, product journeys, or post-transaction emails.

What CES 2.0 is Used For

CES 2.0 helps organizations understand how much effort customers must invest to get things done, whether that's resolving a problem, making a purchase, or using a specific feature.

1. Identifying friction in the customer journey

By asking customers right after key moments (support contacts, checkout, onboarding), CES 2.0 helps pinpoint:

- steps where users get stuck,

- repeated contacts or escalations,

- confusing interfaces or policies.

Combined with CJM (Customer Journey Mapping) and Gap Analysis, CES 2.0 highlights where the experience diverges most from "effortless".

2. Improving customer satisfaction and loyalty

Research on customer effort, including the work that introduced the original CES, suggests that reducing effort often does more for loyalty than trying to delight customers. High effort can:

- drive up Churn Rate,

- reduce Customer Retention and Repurchase Rate,

- undermine high CSAT or NPS scores from other touchpoints.

Tracking CES 2.0 alongside CSAT, NPS and LTV gives a more complete picture of how effort affects long-term value.

3. Optimizing support and service operations

CES 2.0 is often paired with operational metrics like:

- FCR (First Contact Resolution),

- FRT (First Response Time),

- TTR (Time to Resolution).

If FCR is high but CES is low, customers may be getting their issues "resolved" but in a way that feels frustrating or complex. That's a signal to streamline processes, not just hit SLAs.
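
The "high FCR, low CES" pattern described above can be checked mechanically once both metrics are available per queue or team. The following is a minimal sketch, not a standard method: the function name, the `fcr_min` and `ces_max` thresholds, and the sample figures are all illustrative assumptions that should be replaced with your own targets and data.

```python
def flag_effortful_queues(queues, fcr_min=0.80, ces_max=5.0):
    """Return queue names where issues get resolved (high FCR) but feel hard (low CES).

    Both thresholds are hypothetical defaults, not industry benchmarks.
    """
    return [
        name
        for name, metrics in queues.items()
        if metrics["fcr"] >= fcr_min and metrics["ces"] < ces_max
    ]


# Made-up figures for illustration only.
queues = {
    "billing": {"fcr": 0.86, "ces": 4.2},
    "shipping": {"fcr": 0.83, "ces": 5.9},
    "returns": {"fcr": 0.61, "ces": 4.0},
}

print(flag_effortful_queues(queues))  # ['billing']
```

Here "returns" is not flagged because its FCR is also low, which points to a resolution problem rather than the specific "resolved but painful" signal.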

4. Supporting product and UX decisions

CES 2.0 responses can be tied back to:

- specific features, flows, or channels,

- A/B tests in experimental research with random assignment,

- UX metrics like SUS, SUPR-Q, UMUX, UEQ, or VAS.

This makes CES 2.0 a practical link between service-oriented CX and product/UX work.

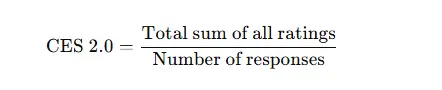

How the CES 2.0 Metric is Calculated

CES 2.0 is intentionally simple to calculate. Respondents rate their agreement with the CES 2.0 statement (or a close variant) on a 1–7 scale:

"The company made it easy for me to handle my issue."

The core formula is:

$$\text{CES 2.0} = \frac{\text{sum of all ratings}}{\text{number of responses}}$$

Example

Suppose five customers give these ratings: 5, 6, 7, 4, 6.

- Sum of ratings: $5 + 6 + 7 + 4 + 6 = 28$

- Divide by number of responses: $28 / 5 = 5.6$

So the CES 2.0 score is 5.6.
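
The same calculation can be sketched in a few lines of Python. This is a minimal illustration of the averaging described above; the function name `ces_score` and the range check are assumptions added for the example, not part of any particular survey tool.

```python
from statistics import fmean


def ces_score(ratings):
    """Return the average of 1-7 agreement ratings, rounded to two decimals."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for rating in ratings:
        if not 1 <= rating <= 7:
            raise ValueError(f"rating out of range: {rating}")
    return round(fmean(ratings), 2)


print(ces_score([5, 6, 7, 4, 6]))  # 5.6
```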

Interpretation:

- Closer to 7 – Customers strongly agree that it was easy to handle their issue (low effort).

- Closer to 1 – Customers strongly disagree and likely experienced high effort or frustration.

In more advanced setups, companies might:

- compute confidence intervals around CES 2.0 using Quantitative Research methods,

- apply Weighted Survey techniques when some customer segments are over/underrepresented,

- use cross-tabulation or Time Series Analysis to explore trends and segment differences.
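
As a rough sketch of the first two ideas, the code below computes a confidence interval around the mean rating and a weighted mean. It assumes a normal approximation for the interval, which is only reasonable for larger samples (a t-based interval is the safer choice for small ones). The function names are hypothetical, and the weights are taken as given.

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev


def ces_confidence_interval(ratings, level=0.95):
    """Normal-approximation confidence interval for the mean rating."""
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two ratings")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% level
    margin = z * stdev(ratings) / sqrt(n)
    centre = fmean(ratings)
    return centre - margin, centre + margin


def weighted_ces(ratings, weights):
    """Weighted mean: each rating counts in proportion to its weight."""
    if len(ratings) != len(weights):
        raise ValueError("ratings and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(r * w for r, w in zip(ratings, weights)) / total


low, high = ces_confidence_interval([5, 6, 7, 4, 6])
print(f"95% CI: {low:.2f} to {high:.2f}")
print(weighted_ces([7, 3], [1, 3]))  # 4.0
```

With only five responses the interval is wide, which is a useful reminder not to over-read small differences between scores.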

General CES 2.0 Survey Methodology

A good CES 2.0 program is less about a single question and more about where and how you ask it.

1. Define the interaction you want to measure

Decide which touchpoint you're interested in:

- support interaction (ticket, chat, call),

- purchase or checkout process,

- onboarding or activation journey,

- account changes, cancellations, or returns.

Link the CES question to a specific event so responses are meaningful.

2. Formulate clear CES 2.0 statements

The standard statement is:

"The company made it easy for me to handle my issue."

You can adapt wording slightly to fit context (e.g., "to place my order", "to change my plan"), but keep the structure focused on ease, not satisfaction or happiness; that's what CSAT is for.

3. Ask immediately after the interaction

To capture fresh impressions:

- trigger CES 2.0 right after a chat ends, ticket closes, or purchase completes,

- embed it in transactional emails, in-app prompts, or SMS,

- use Pulse Survey logic for frequent, light-touch measurement.

For larger programs, CES 2.0 can be part of cross-sectional surveys or panel studies, as long as recall bias is considered.

4. Collect a representative sample

Ensure your respondents reflect the real customer base:

- monitor volumes by region, segment, device, and channel,

- use Weighted Survey techniques if some groups are underrepresented,

- avoid only sampling "easy" cases (e.g., only resolved tickets).

This matters especially when CES 2.0 results feed into broader Predictive Analysis or KPI dashboards.
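
One common way to correct for under- or overrepresented groups is post-stratification: each segment gets a weight equal to its share of the customer base divided by its share of the sample. The sketch below assumes you know the population shares. The function name, segment labels, and shares are illustrative, and this is only one of several possible weighting schemes.

```python
from collections import Counter


def poststratification_weights(sample_segments, population_shares):
    """Weight per segment = population share / sample share."""
    counts = Counter(sample_segments)
    n = len(sample_segments)
    weights = {}
    for segment, population_share in population_shares.items():
        if counts[segment] == 0:
            raise ValueError(f"no responses from segment: {segment}")
        weights[segment] = population_share / (counts[segment] / n)
    return weights


# Made-up example: web users answered far more often than mobile users,
# although each group is assumed to be half of the customer base.
sample = ["web"] * 8 + ["mobile"] * 2
weights = poststratification_weights(sample, {"web": 0.5, "mobile": 0.5})
print(weights)
```

In this example mobile responses are weighted up (about 2.5) and web responses are weighted down (about 0.625) before a weighted average is computed.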

5. Analyze results and identify patterns

Beyond the average score:

- segment by product, channel, issue type, agent, or journey step,

- use cross-tabulation to see which segments struggle most,

- track trends over time using time series analysis,

- combine CES 2.0 with CSAT, NPS, Repurchase Rate, Churn Rate, and operational metrics (FCR, TTR).
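
Segment comparisons and simple trends both come down to averaging ratings within groups. The sketch below assumes each response is a dictionary with a `rating` plus descriptive fields. The field names (`channel`, `month`) and the data are hypothetical, and a real setup would more likely use a spreadsheet or analytics tool.

```python
from collections import defaultdict
from statistics import fmean


def mean_by(responses, key):
    """Average rating per distinct value of `key` (e.g. channel or month)."""
    groups = defaultdict(list)
    for response in responses:
        groups[response[key]].append(response["rating"])
    return {group: round(fmean(ratings), 2) for group, ratings in groups.items()}


# Made-up responses for illustration only.
responses = [
    {"channel": "chat", "month": "2025-01", "rating": 6},
    {"channel": "chat", "month": "2025-02", "rating": 7},
    {"channel": "phone", "month": "2025-01", "rating": 3},
    {"channel": "phone", "month": "2025-02", "rating": 5},
]

print(mean_by(responses, "channel"))  # {'chat': 6.5, 'phone': 4.0}
print(mean_by(responses, "month"))    # {'2025-01': 4.5, '2025-02': 6.0}
```

Grouping by channel shows which segment struggles most, while grouping by month gives a basic trend line.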

Include at least one open-ended question ("What made this easy/difficult?") and apply Qualitative Analysis or Sentiment Analysis to understand root causes.

6. Turn insights into improvements

Use CES 2.0 data to prioritize:

- process simplification,

- UX changes,

- automation and self-service,

- support training and scripts.

Then rerun CES 2.0 and compare waves to see if changes worked.
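
When comparing waves, it helps to look at the difference in means together with an uncertainty range, so that noise is not mistaken for improvement. The following is a minimal sketch under stated assumptions: two independent samples, a normal approximation for the interval, and a hypothetical function name. It is not a substitute for a properly designed test.

```python
from math import sqrt
from statistics import NormalDist, fmean, variance


def compare_waves(before, after, level=0.95):
    """Return (difference in mean CES, (low, high) interval), computed as after - before."""
    if len(before) < 2 or len(after) < 2:
        raise ValueError("each wave needs at least two ratings")
    diff = fmean(after) - fmean(before)
    # Standard error of the difference between two independent means.
    se = sqrt(variance(before) / len(before) + variance(after) / len(after))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, (diff - z * se, diff + z * se)


# Made-up ratings for illustration only.
before = [4, 5, 4, 3, 5, 4]
after = [6, 6, 5, 7, 6, 6]
diff, (low, high) = compare_waves(before, after)
print(f"change: {diff:+.2f} (95% interval {low:.2f} to {high:.2f})")
```

If the interval excludes zero, the change is less likely to be random variation. With samples this small the approximation is rough, so treat the result as a prompt for further checking.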

What is Considered a Good CES 2.0 Score?

On the 1–7 CES 2.0 scale, higher values indicate that customers perceive the experience as easy and low-effort. While exact benchmarks vary by industry and context, a rough interpretation looks like this:

- Above 5 – Generally good: most customers found the interaction easy.

- Around 4–5 – Mid-range: acceptable, but there are noticeable friction points that need attention.

- Below 4 – Problematic: customers perceive interactions as effortful and difficult, calling for immediate investigation and improvement.
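
These rough bands can be encoded as a small helper for dashboards or reports. This is a sketch of the interpretation above, not an official benchmark. The function name is hypothetical, and treating a score of exactly 5 as mid-range is an assumption, since the bands in the text do not specify the boundary.

```python
def interpret_ces(score):
    """Map an average CES 2.0 score (1-7) to the rough bands described above."""
    if not 1 <= score <= 7:
        raise ValueError(f"score out of range: {score}")
    if score > 5:
        return "good"
    if score >= 4:
        return "mid-range"
    return "problematic"


print(interpret_ces(5.6))  # good
print(interpret_ces(4.5))  # mid-range
print(interpret_ces(3.2))  # problematic
```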

Because CES 2.0 is context-dependent, it's best to:

- compare your score with your own historical data,

- benchmark against similar products or channels,

- interpret CES alongside CSAT, NPS, CSS, CSI, ACSI, and behavioral metrics like Churn Rate, Customer Retention and LTV.

The real value comes from trends and segment comparisons, not just a single "good or bad" number.

How to Improve the CES 2.0 Metric

Improving CES 2.0 means systematically making it easier for customers to achieve their goals with minimal friction.

Here are practical strategies:

1. Simplify journeys and interfaces

- streamline key flows (checkout, returns, account changes),

- reduce the number of steps and required fields,

- fix confusing labels and navigation,

- remove dead ends and unnecessary redirects.

Measure impact with SUS, SUPR-Q, SEQ and follow-up CES 2.0 waves.

2. Expand and optimize support channels

- offer multiple channels: live chat, phone, email, social, in-app messaging,

- ensure consistency across channels,

- route queries smartly so customers don't repeat themselves.

Track CES 2.0 by channel to see which ones truly feel "easy" from the customer side.

3. Invest in self-service

- build a clear, searchable knowledge base and FAQ,

- add contextual help, tooltips, and walkthroughs in the product,

- use chatbots for simple, repetitive queries, escalating smoothly to humans when needed.

Self-service done well reduces effort and operational load, both of which tend to improve CES 2.0 and related metrics.

4. Use feedback loops and continuous improvement

- regularly analyze CES 2.0, CSAT and NPS responses,

- cluster open comments with sentiment analysis,

- run experimental research (A/B tests) on proposed changes.

This creates a cycle: measure effort → fix friction → re-measure → iterate.

5. Train and empower frontline teams

- give agents clear processes and knowledge,

- empower them to solve issues in one interaction (improving FCR and CES simultaneously),

- share CES 2.0 and VOC insights with teams so they see the impact of their work.

Tie improvements in CES 2.0 and related KPIs to recognition or goals (carefully, to avoid gaming).

6. Proactively prevent issues

Where possible:

- communicate clearly before problems arise (e.g., delays, outages, policy changes),

- offer guided flows and recommendations inside the product,

- anticipate common mistakes and design them out of the system.

The easiest interaction is the one the customer never has to initiate, and this philosophy strongly supports high CES 2.0 scores.

CES 2.0 turns a vague question ("Was this interaction painful?") into a simple, trackable metric for customer effort.

Combined with tools like SurveyNinja for data collection and CX analytics for segmentation and trend analysis, CES 2.0 helps teams systematically remove friction from the customer journey, improving satisfaction, loyalty, and long-term business outcomes.

Updated: Dec 16, 2025 Published: Jun 2, 2025

Mike Taylor
